Download Executable Attachment

Sometimes we need to upload data to Redshift from SQL Server. But there is no straightforward way to do that. And we have to do the following task manually to import the data to redshift.

Sometimes we need to upload data to Redshift from SQL Server. But there is no straightforward way to do that. And we have to do the following task manually to import the data to redshift.

- Export data from SQL Server to a text file.

- Compressed the file to GZIP.

- Upload the file to S3.

- Execute copy command in redshift.

I have written a python script that does the above task. The script first read configuration from a YML file, export the SQL server data to a text file using BCP command, compressed the text file, upload the compressed file to S3, truncate the redshift table and finally execute a copy command to load the data to redshift from that file.

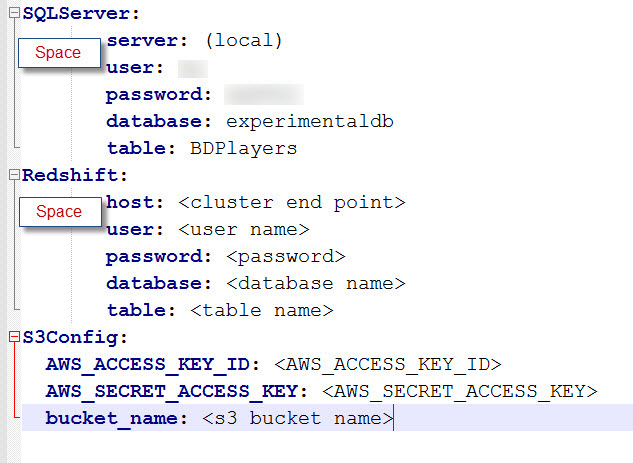

You can download the full project from github. I have also create a distributable(exe) so that one can easily run the script by just change the configuration file. Download the attachment. After extracting the attachment you can find a folder name ImportDataToRedshift and a configuration file config.yml. Change the config file by providing your own credential.

Figure: Snapshot of config.yml configuration structure.

Please give extra attention when editing config file and insert necessary space for every section. Open a command prompt and move to ImportDataToRedshift directory then run the ImportDataToRedshift.exe file by providing the full path of the config file. See the below image.

Please give extra attention when editing config file and insert necessary space for every section. Open a command prompt and move to ImportDataToRedshift directory then run the ImportDataToRedshift.exe file by providing the full path of the config file. See the below image.

Figure: Snapshot after running the executable file.

select * from stl_load_errors order by starttime desc limit 10

Using this script you can export data from any table or view of SQL Server. Just make sure that the SQL Server and Redshift table structure are identical. If you face any problem please let me know through comments.

If you do not find your expected data in the redshift table you can execute the below query in redshift cluster and investigate if there are any data loading errors.

Using this script you can export data from any table or view of SQL Server. Just make sure that the SQL Server and Redshift table structure are identical. If you face any problem please let me know through comments.

Please, could you provide the download link for the executable? The current link is dead. Thank you

ReplyDeleteHi Bravo,

DeletePlease check the download link now.

It´s ok! Thank you very much!!

ReplyDelete